Understanding the mathematical foundations of Deep Learning is crucial to mastering neural networks and optimization techniques. At the core of this field lie computational graphs and automatic differentiation, which enable efficient gradient computation and optimization in modern machine learning models.

This article introduces the concept of computational graphs and explores the automatic differentiation techniques used to compute gradients, including forward mode differentiation and reverse mode differentiation (backpropagation). These methods are essential in training neural networks and are widely implemented in frameworks such as PyTorch.

This article is part of the Deep Learning Foundations series, which covers fundamental topics essential for understanding deep learning. If you are looking for a structured introduction to deep learning concepts, check out Deep Learning Foundations.

Now, let’s dive into computational graphs, differentiation techniques, and their role in training neural networks.

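Consider a function $f$ that depends on some parameters $\theta$: $f(x; \theta)$. We have a set of input-output pairs, $\{(x_i, y_i)\}_{i=1}^{N}$. For each input-output pair, we can define an error function $E$ that measures the difference between the output of $f$ and the actual value $y_i$. Specifically, the error for the $i$-th pair can be expressed as:

$$E_i(\theta) = E\big(f(x_i; \theta),\, y_i\big)$$

Our goal is to find the value of $\theta$ that minimizes the error $E_i$ on average:

$$\theta^{*} = \arg\min_{\theta} \frac{1}{N} \sum_{i=1}^{N} E_i(\theta)$$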

One effective way to find the optimal parameters is by using the Stochastic Gradient Descent (SGD) method.

Gradient Computation

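To minimize the error, we need to compute the gradient of the error with respect to the parameters, $\nabla_\theta E_i(\theta)$. The expected value of this gradient, $\mathbb{E}_i[\nabla_\theta E_i(\theta)]$, has the same dimensionality as $\theta$ and points in the direction in which the average error increases fastest. To reduce the error, we take steps in the opposite direction of the gradient:

$$\theta \leftarrow \theta - \eta \, \mathbb{E}_i[\nabla_\theta E_i(\theta)]$$

where $\eta$ is the learning rate, which controls the step size. SGD replaces the expected gradient with the gradient computed on a single sample (or a small batch) at each step.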
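The update rule can be sketched in a few lines of plain Python. This is a minimal illustration, not the article's own code: the one-parameter model `theta * x`, the squared error, the toy data, and the hyperparameters are all assumptions chosen to keep the example short.

```python
import random

def model(x, theta):
    # Hypothetical one-parameter model: f(x; theta) = theta * x
    return theta * x

def grad_error(x, y, theta):
    # Gradient of the squared error (model(x, theta) - y)**2 with respect to theta
    return 2.0 * (model(x, theta) - y) * x

def sgd(pairs, theta=0.0, learning_rate=0.05, epochs=200, seed=0):
    rng = random.Random(seed)
    pairs = list(pairs)
    for _ in range(epochs):
        rng.shuffle(pairs)
        for x, y in pairs:
            # Step against the gradient of the error on a single sample
            theta -= learning_rate * grad_error(x, y, theta)
    return theta

# Toy data generated by y = 3 * x, so the minimizer is theta = 3
data = [(x, 3.0 * x) for x in (-1.0, -0.5, 0.5, 1.0, 2.0)]
theta_hat = sgd(data)
print(theta_hat)
```

Here the gradient is written by hand, which is exactly the step that automatic differentiation removes for larger models.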

Methods to Compute Gradients

There are several ways to compute the gradient $\nabla_\theta E$:

- Manual Differentiation: This involves computing gradients by hand. While feasible for simple functions, it becomes impractical for complex or deep networks.

- Symbolic Differentiation: This method uses symbolic algebra systems to compute exact derivatives. However, it can be computationally expensive or even impossible for very large models.

- Automatic Differentiation (Autograd): This is the preferred method in machine learning. It combines numerical efficiency with the ability to handle complex models.

A Simple Machine Learning Model

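A simple machine learning model can be represented as a sequence of layers:

$$x \rightarrow f_1 \rightarrow f_2 \rightarrow \dots \rightarrow f_n \rightarrow y$$

This can be formalized as:

$$y = f_n(f_{n-1}(\dots f_2(f_1(x)) \dots))$$

This operation is called function composition and can also be written as $y = (f_n \circ f_{n-1} \circ \dots \circ f_1)(x)$.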
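As a small illustration of composition, the sketch below chains three layers into one callable. The helper `compose` and the layers `f1`, `f2`, `f3` are hypothetical and chosen only to make the arithmetic easy to follow.

```python
from functools import reduce

def compose(*layers):
    # compose(f1, f2, f3)(x) == f3(f2(f1(x))): layers are applied left to right
    return lambda x: reduce(lambda value, layer: layer(value), layers, x)

# Hypothetical layers, chosen only for illustration
f1 = lambda x: 2.0 * x
f2 = lambda x: x + 1.0
f3 = lambda x: x ** 2

model = compose(f1, f2, f3)
print(model(3.0))  # (2*3 + 1)**2 = 49.0
```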

Chain Rule and Backpropagation

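For a composition of functions, the derivative can be computed using the chain rule. For example, consider:

$$y = f(g(x))$$

The derivative is given by:

$$\frac{dy}{dx} = f'(g(x)) \, g'(x)$$

Unfolding the equation for $y$ with the intermediate variable $u$:

$$u = g(x), \qquad y = f(u)$$

The derivative of $y$ with respect to $x$ is then:

$$\frac{dy}{dx} = \frac{dy}{du} \cdot \frac{du}{dx}$$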

This process, which involves multiplying the partial derivatives, is fundamental to backpropagation in neural networks.
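The chain rule can be checked numerically. The sketch below assumes a specific composition, $g(x) = x^2$ and $f(u) = \sin(u)$, which is not taken from the article, and compares the product of the local derivatives with a central finite difference.

```python
import math

# Hypothetical composition y = f(g(x)) with g(x) = x**2 and f(u) = sin(u)
def g(x):
    return x ** 2

def dg(x):
    return 2.0 * x

def f(u):
    return math.sin(u)

def df(u):
    return math.cos(u)

def dy_dx(x):
    # Chain rule: dy/dx = dy/du * du/dx, evaluated at u = g(x)
    u = g(x)
    return df(u) * dg(x)

def finite_difference(func, x, h=1e-6):
    # Central difference, used here only as an independent check
    return (func(x + h) - func(x - h)) / (2.0 * h)

x0 = 0.7
analytic = dy_dx(x0)
numeric = finite_difference(lambda x: f(g(x)), x0)
print(analytic, numeric)
```

The two printed values should agree to several decimal places; the finite difference is only an approximation, while the chain rule result is exact up to floating point rounding.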

Binary Operators

A binary operator is a rule for combining two elements (called operands) to produce another element. Common examples are:

- Addition

- Multiplication

- Subtraction

- Division

Every binary operator causes a split in the derivative flow: the derivative passes through each of its two operands, and where the two branches meet again their contributions are summed:

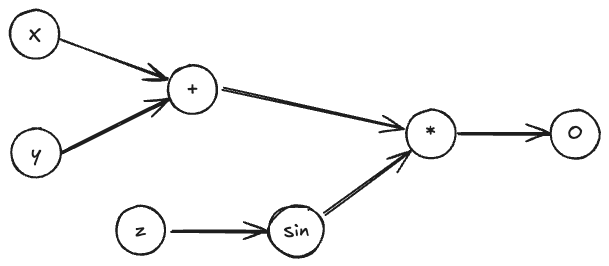

Computational Graphs

Computational graphs are a powerful tool for visualizing and performing differentiation on complex functions. Each node in the computational graph represents an operation, and the edges represent the flow of data between these operations.

Binary Operator Differentiation

Binary operators such as addition, subtraction, multiplication, and division play a crucial role in computational graphs. When differentiating a computational graph that contains binary operators, each operator creates a split in the differentiation flow. Consider the following function:

$$o = (x + y) \cdot \sin(x)$$

For binary operators, we treat the differentiation as follows:

- Addition ($+$): The derivative of the output with respect to each input is simply $1$. For example, for $z = a + b$, the partial derivatives are $\frac{\partial z}{\partial a} = 1$ and $\frac{\partial z}{\partial b} = 1$. This means that when backpropagating, the gradient is passed unchanged to both inputs.

- Subtraction ($-$): The derivative with respect to the first operand is $1$, and with respect to the second operand is $-1$. For example, for $z = a - b$, $\frac{\partial z}{\partial a} = 1$ and $\frac{\partial z}{\partial b} = -1$. During backpropagation, the gradient flows positively to the minuend and negatively to the subtrahend.

- Multiplication ($\times$): The derivative depends on both inputs. The partial derivative with respect to $a$ is the value of $b$, and vice versa. For example, for $z = a \cdot b$, $\frac{\partial z}{\partial a} = b$ and $\frac{\partial z}{\partial b} = a$. This means that during backpropagation, each input receives the gradient multiplied by the value of the other input.

- Division ($\div$): The derivative with respect to the numerator is $\frac{1}{b}$, and with respect to the denominator is $-\frac{a}{b^2}$. For example, for $z = \frac{a}{b}$, $\frac{\partial z}{\partial a} = \frac{1}{b}$ and $\frac{\partial z}{\partial b} = -\frac{a}{b^2}$. During backpropagation, the gradient is scaled by the reciprocal of the denominator for the numerator, and by $-\frac{a}{b^2}$ for the denominator.

Each binary operator in the computational graph results in a split in the flow of derivatives, and the contributions of the branches must be summed when propagating back through the graph. Note that if the expression contains a constant coefficient, such as the $c$ in $c \cdot x$, the representation in the computational graph can be simplified by considering the coefficient as a separate node in the graph.

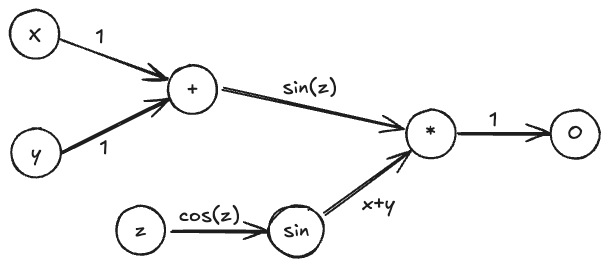
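The local derivative rules for the four binary operators can be collected in one small function. This is a sketch with hypothetical helper names (`local_grads`, `backward_through`), not an API of any framework.

```python
def local_grads(op, a, b):
    """Return (d out / d a, d out / d b) for out = a <op> b."""
    if op == "+":
        return 1.0, 1.0
    if op == "-":
        return 1.0, -1.0
    if op == "*":
        return b, a
    if op == "/":
        return 1.0 / b, -a / (b * b)
    raise ValueError(f"unsupported operator: {op}")

def backward_through(op, a, b, upstream):
    # Chain rule: each operand receives the upstream gradient times its local derivative
    da, db = local_grads(op, a, b)
    return upstream * da, upstream * db

print(backward_through("*", 3.0, 4.0, upstream=2.0))  # (8.0, 6.0)
print(backward_through("/", 6.0, 2.0, upstream=1.0))  # (0.5, -1.5)
```

Keeping the local rule separate from the upstream gradient mirrors how a graph node only needs to know its own operation, not the rest of the graph.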

Forward Differentiation

In forward mode differentiation, the derivatives are propagated from inputs to outputs, following the flow of the computational graph. This method is particularly useful when the number of inputs is smaller than the number of outputs, because a single forward pass yields the derivatives of every output with respect to one chosen input.

Consider the same example:

$$o = (x + y) \cdot \sin(x)$$

Let’s proceed by building the computational graph, writing down the partial derivatives at each step (each edge is labeled with the derivative of the node it enters with respect to the node it leaves):

Here is how the differentiation proceeds step by step:

- At the addition node ($+$), we propagate the partial derivatives of $x + y$ with respect to $x$ and $y$, which are both $1$. The output of the addition node has a derivative of $1$ with respect to each of its inputs.

- At the sine node ($\sin$), the derivative with respect to $x$ is computed as $\cos(x)$. This value is then used in the next step to propagate through the multiplication node.

- At the multiplication node ($\times$), we apply the chain rule. The partial derivative with respect to the output of the addition node is $\sin(x)$, and the derivative with respect to the output of the sine node is $x + y$. The derivative value is propagated through the edges to the final output node.

Forward mode differentiation computes the derivative of the output with respect to one input parameter in a single pass through the computational graph. Given the partial derivatives at each step, the pass is repeated once per input to obtain the gradient of the output with respect to all input parameters. In the following, the computational graph is represented to show the flow of derivatives from an input to the output. The derivatives are computed at each step and propagated forward through the graph.

We obtain that the derivative of the output with respect to $x$ is $\frac{\partial o}{\partial x} = \sin(x) + (x + y) \cos(x)$.

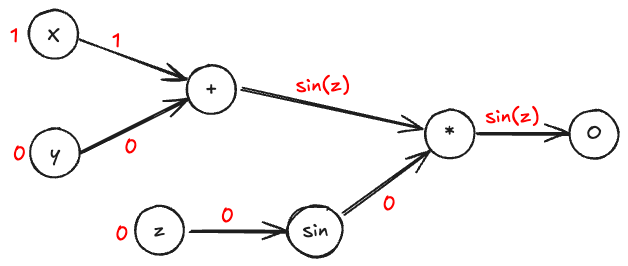
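One common way to implement forward mode is with dual numbers, where every value carries its derivative with respect to one chosen input. The sketch below is a minimal illustration, assuming the example function is `(x + y) * sin(x)`, the one evaluated in the article's PyTorch snippet. The `Dual` class and the helper `forward_derivative` are hypothetical names, and only addition, multiplication, and sine are supported.

```python
import math

class Dual:
    """A value together with its derivative with respect to one chosen input."""
    def __init__(self, value, deriv=0.0):
        self.value = value
        self.deriv = deriv

    def __add__(self, other):
        return Dual(self.value + other.value, self.deriv + other.deriv)

    def __mul__(self, other):
        # Product rule
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

def sin(d):
    # Chain rule through the sine node
    return Dual(math.sin(d.value), math.cos(d.value) * d.deriv)

def f(x, y):
    return (x + y) * sin(x)

def forward_derivative(x, y, wrt):
    # Seed the chosen input with derivative 1 and the other input with 0
    dx = Dual(x, 1.0 if wrt == "x" else 0.0)
    dy = Dual(y, 1.0 if wrt == "y" else 0.0)
    return f(dx, dy).deriv

do_dx = forward_derivative(math.pi, math.pi, "x")
do_dy = forward_derivative(math.pi, math.pi, "y")
print(do_dx, do_dy)
```

Note that two passes are needed to get both partial derivatives, one per input. At $x = y = \pi$ the first value is close to $-2\pi$ and the second is close to zero.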

Reverse Differentiation

Reverse mode differentiation, also known as backpropagation, is more efficient when dealing with functions where the number of inputs is much larger than the number of outputs. In machine learning, this is often the case as models may have thousands or millions of parameters (inputs) but produce a single scalar loss (output).

Consider the same function:

$$o = (x + y) \cdot \sin(x)$$

In reverse mode differentiation, we begin by computing the derivative of the final output with respect to itself, which is $\frac{\partial o}{\partial o} = 1$. We then propagate this derivative backward through each node in the graph:

- At the multiplication node ($\times$), we distribute the gradient to each of its inputs. The derivative with respect to $x + y$ is $\sin(x)$, and the derivative with respect to $\sin(x)$ is $x + y$.

- At the sine node ($\sin$), we propagate the gradient back by multiplying it with the derivative of $\sin(x)$, which is $\cos(x)$.

- At the addition node ($+$), the gradient is passed unchanged to both $x$ and $y$, since $\frac{\partial (x + y)}{\partial x} = 1$ and $\frac{\partial (x + y)}{\partial y} = 1$.

The process continues until all input nodes have received their respective gradient contributions. This allows us to efficiently compute the gradient of the output with respect to every input parameter in a single backward pass. The computational graph for reverse differentiation can be represented as follows:

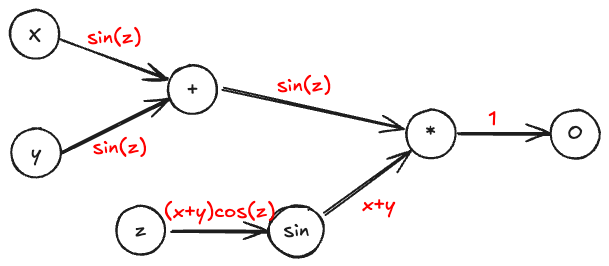

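If we want to compute the derivative of the output with respect to $x$, we can follow the reverse differentiation process, summing the contributions of the two paths that reach $x$:

$$\frac{\partial o}{\partial x} = \sin(x) \cdot 1 + (x + y) \cdot \cos(x)$$

Or, if we want to compute the derivative of the output with respect to $y$:

$$\frac{\partial o}{\partial y} = \sin(x) \cdot 1 = \sin(x)$$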

This representation clearly shows how the gradients flow backward through the computational graph, allowing efficient computation of all required derivatives.
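The backward pass can be sketched with a small graph of nodes that each remember their parents and the local derivative on every incoming edge. This is an illustrative sketch, assuming the example function is `(x + y) * sin(x)` as in the article's PyTorch snippet. The `Node` class and the helpers `add`, `mul`, `sin`, and `backward` are hypothetical and do not reflect how any particular framework is implemented.

```python
import math

class Node:
    """A value in the graph, its parents, and the local derivative on each edge."""
    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # tuples of (parent_node, d self / d parent)
        self.grad = 0.0

def add(a, b):
    return Node(a.value + b.value, ((a, 1.0), (b, 1.0)))

def mul(a, b):
    return Node(a.value * b.value, ((a, b.value), (b, a.value)))

def sin(a):
    return Node(math.sin(a.value), ((a, math.cos(a.value)),))

def backward(output):
    # Topological order, so a node's gradient is complete before it is propagated
    order, seen = [], set()

    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0  # derivative of the output with respect to itself
    for node in reversed(order):
        for parent, local in node.parents:
            # Contributions arriving through different paths add up
            parent.grad += node.grad * local

x, y = Node(math.pi), Node(math.pi)
o = mul(add(x, y), sin(x))
backward(o)
print(x.grad, y.grad)
```

A single call to `backward` fills in the gradient for both inputs, which is the property that makes reverse mode attractive when there are many parameters and one scalar output.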

Automatic Differentiation Frameworks

Several automatic differentiation frameworks use reverse mode differentiation under the hood and provide high-level APIs for building complex machine learning models. PyTorch is one widely used example.

These frameworks allow users to define computational graphs and automatically compute gradients, making it easier to implement and train deep learning models. For instance, in PyTorch:

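```python
import math

import torch

# Both inputs are set to pi and tracked for gradient computation
x = torch.tensor([math.pi], requires_grad=True)
y = torch.tensor([math.pi], requires_grad=True)

# Build the graph for o = (x + y) * sin(x)
o = (x + y) * torch.sin(x)

# Reverse mode pass: fills x.grad and y.grad
o.backward()

print(x.grad)  # Output: tensor([-6.2832]), i.e. sin(x) + (x + y) * cos(x) = -2*pi
print(y.grad)  # Output: tensor([-8.7423e-08]), i.e. sin(x), zero up to float32 rounding
```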
